Publications

* denotes equal contribution and joint lead authorship.

Patents

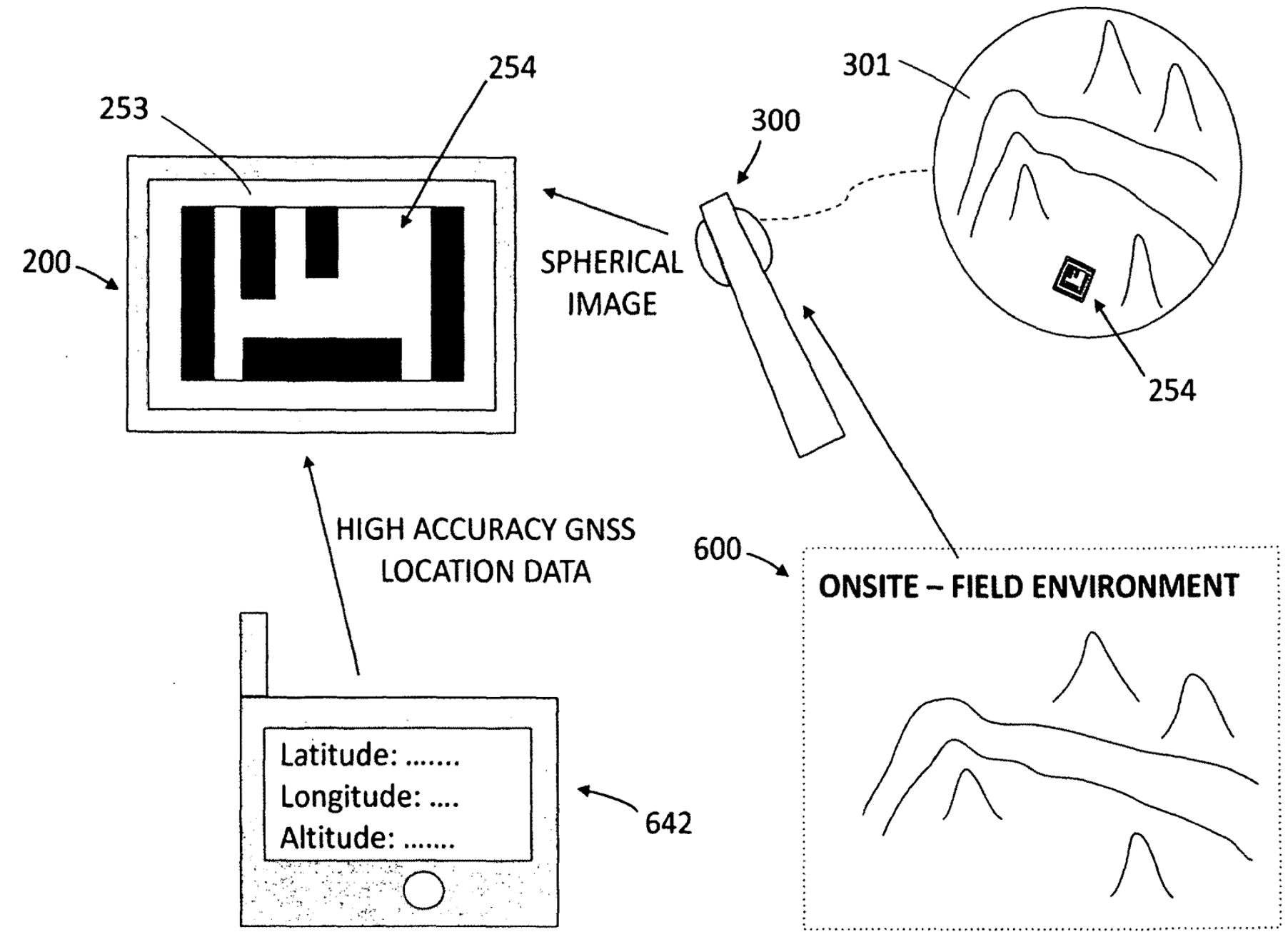

US20200389601A1

Spherical Image Based Registration and Self-Localization for Onsite and Offsite Viewing

United States Patent: US11418716B2, Active: August 16, 2022.

Systems and methods for image registration and self-localization for onsite and offsite viewing are provided. In one aspect, systems and methods for spherical image based registration and self-localization for onsite and offsite viewing, such as augmented reality viewing and virtual reality viewing, are provided. In one embodiment, a system includes a portable electronic device fitted with a device camera, an external omnidirectional spherical camera, and a remote server. In one embodiment, a method of use employs a set of fiducial markers inserted in images collected by a spherical camera to perform image registration of spherical camera images and device camera images.

Under Review

ICRA

Collaborative Scheduling with Adaptation to Failure for Heterogeneous Robot Teams

International Conference on Robotics and Automation (ICRA), Under Review, 2023

Collaborative scheduling is an essential ability for a team of heterogeneous robots to collaboratively complete complex tasks, e.g., in a multi-robot assembly application. To enable collaborative scheduling, two key problems should be addressed, including allocating tasks to heterogeneous robots and adapting to robot failures in order to guarantee the completion of all tasks. In this paper, we introduce a novel approach that integrates deep bipartite graph matching and imitation learning for heterogeneous robots to complete complex tasks as a team. Specifically, we use a graph attention network to represent attributes and relationships of the tasks. Then, we formulate collaborative scheduling with failure adaptation as a new deep learning-based bipartite graph matching problem, which learns a policy by imitation to determine task scheduling based on the reward of potential task schedules. During normal execution, our approach generates robot-task pairs as potential allocations. When a robot fails, our approach identifies not only individual robots but also subteams to replace the failed robot. We conduct extensive experiments to evaluate our approach in the scenarios of collaborative scheduling with robot failures. Experimental results show that our approach achieves promising, generalizable and scalable results on collaborative scheduling with robot failure adaptation.

ICRA

Decentralized and Communication-Free Multi-Robot Navigation through Distributed Games

International Conference on Robotics and Automation (ICRA), Under Review, 2023

Effective multi-robot teams require the ability to move to goals in complex environments in order to address real-world applications such as search and rescue. Multi-robot teams should be able to operate in a completely decentralized manner, with individual robot team members being capable of acting without explicit communication between neighbors. In this paper, we propose a novel game theoretic model that enables decentralized and communication-free navigation to a goal position. Robots each play their own distributed game by estimating the behavior of their local teammates in order to identify behaviors that move them in the direction of the goal, while also avoiding obstacles and maintaining team cohesion without collisions. We prove theoretically that generated actions approach a Nash equilibrium, which also corresponds to an optimal strategy identified for each robot. We show through extensive simulations that our approach enables decentralized and communication-free navigation by a multi-robot system to a goal position, and is able to avoid obstacles and collisions, maintain connectivity, and respond robustly to sensor noise.

RAL

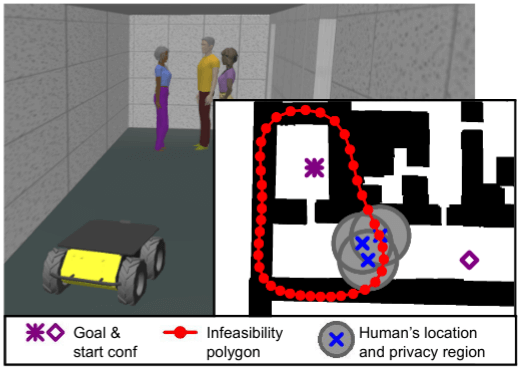

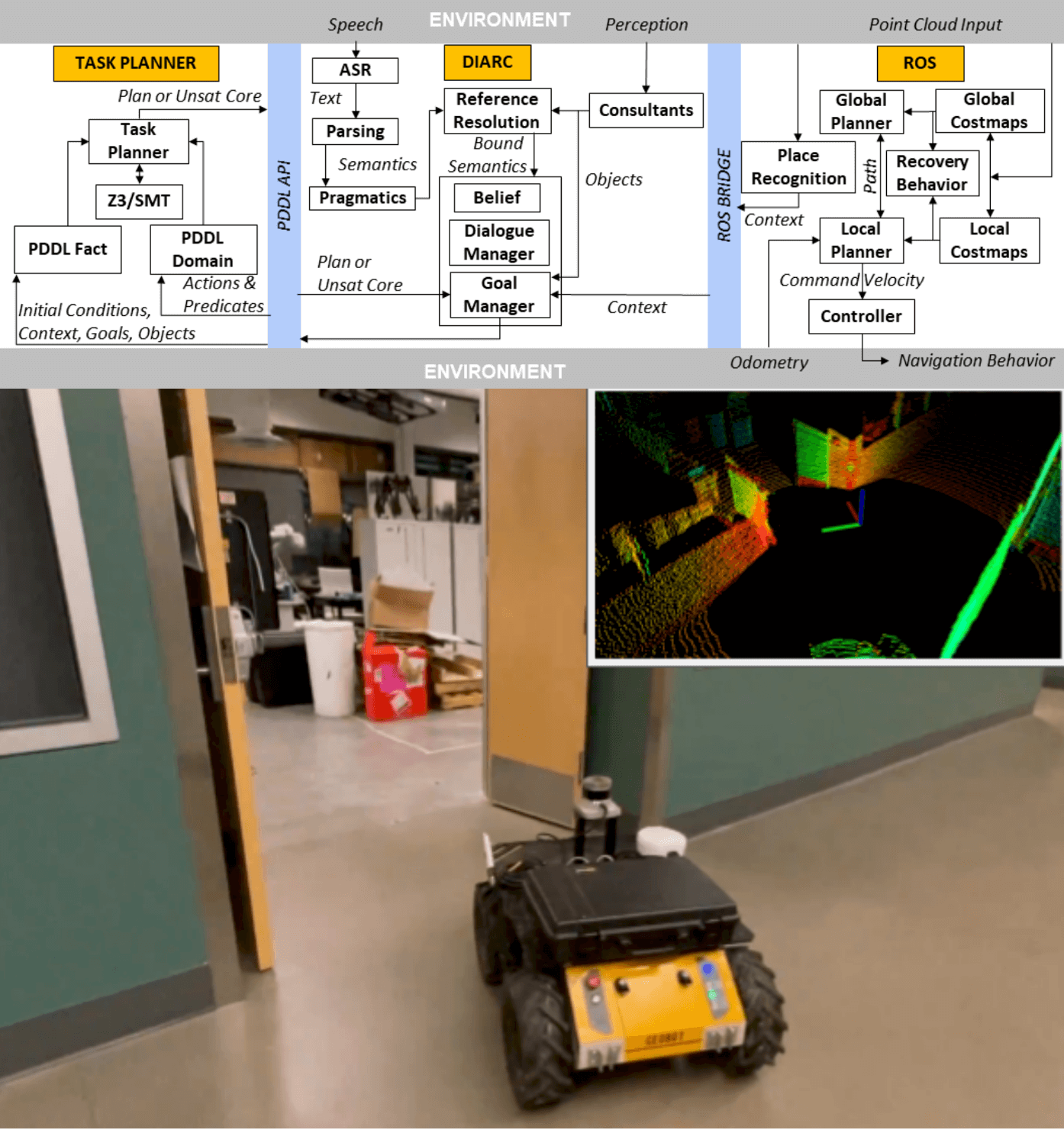

Command Rejection in Privacy-Sensitive Contexts: An Integrated Robotic System Approach

Submitted to: Robotics and Automation Letters (RAL), 2022

We explore how robots can consider privacy concerns during navigation tasks and reject navigation commands that cannot be achieved on privacy grounds. We present an integrated robotics approach to this problem, which combines a language-capable cognitive architecture (for recognizing intent behind commands), an object- and location-based context recognition system (for identifying the locations of people and classifying the context in which those people are situated) and an infeasibility proof-based motion planner (for identifying when commands cannot be achieved on the basis of contextually mediated privacy concerns). The behavior of this integrated system is validated using a series of experiments in a simulated medical environment.

IJRR

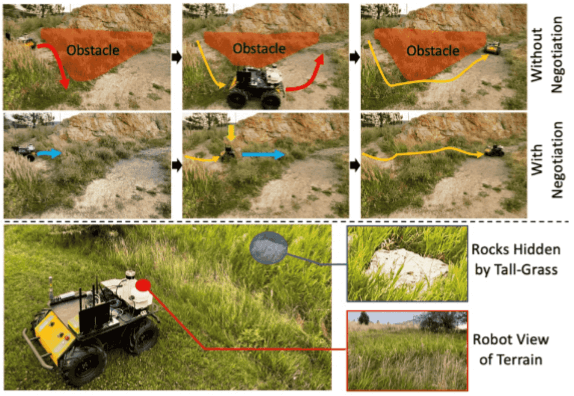

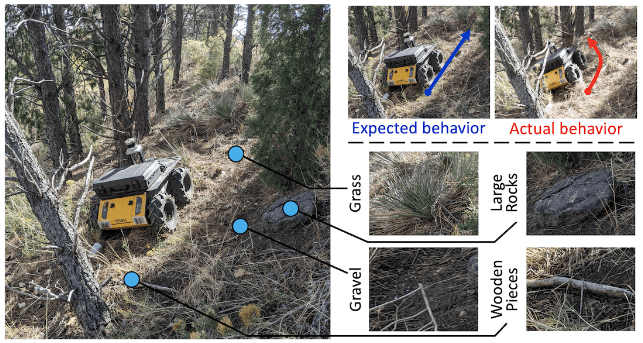

Self-Reflective Terrain-Aware Robot Adaptation for Consistent Off-Road Ground Navigation

Submitted to: The International Journal of Robotics Research (IJRR)

Ground robots require the crucial capability of traversing unstructured and unprepared terrains and avoiding obstacles to complete tasks in real-world robotics applications such as disaster response. When a robot operates in off-road field environments such as forests, the robot's actual behaviors often do not match its expected or planned behaviors, due to changes in the characteristics of terrains and the robot itself. Therefore, the capability of robot adaptation for consistent behavior generation is essential for maneuverability on unstructured off-road terrains. In order to address the challenge, we propose a novel method of self-reflective terrain-aware adaptation for ground robots to generate consistent controls to navigate over unstructured off-road terrains, which enables robots to more accurately execute the expected behaviors through robot self-reflection while adapting to varying unstructured terrains. To evaluate our method's performance, we conduct extensive experiments using real ground robots with various functionality changes over diverse unstructured off-road terrains. The comprehensive experimental results have shown that our self-reflective terrain-aware adaptation method enables ground robots to generate consistent navigational behaviors and outperforms the compared previous and baseline techniques.

Published Papers

IROS

NAUTS: Negotiation for Adaptation to Unstructured Terrain Surfaces

IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2022

When robots operate in real-world off-road environments with unstructured terrains, the ability to adapt their navigational policy is critical for effective and safe navigation. However, off-road terrains introduce several challenges to robot navigation, including dynamic obstacles and terrain uncertainty, leading to inefficient traversal or navigation failures. To address these challenges, we introduce a novel approach for adaptation by negotiation that enables a ground robot to adjust its navigational behaviors through a negotiation process. Our approach first learns prediction models for various navigational policies to function as a terrain-aware joint local controller and planner. Then, through a new negotiation process, our approach learns from various policies' interactions with the environment to agree on the optimal combination of policies in an online fashion to adapt robot navigation to unstructured off-road terrains on the fly. Additionally, we implement a new optimization algorithm that offers the optimal solution for robot negotiation in real-time during execution. Experimental results have validated that our method for adaptation by negotiation outperforms previous methods for robot navigation, especially over unseen and uncertain dynamic terrains.

CoRL

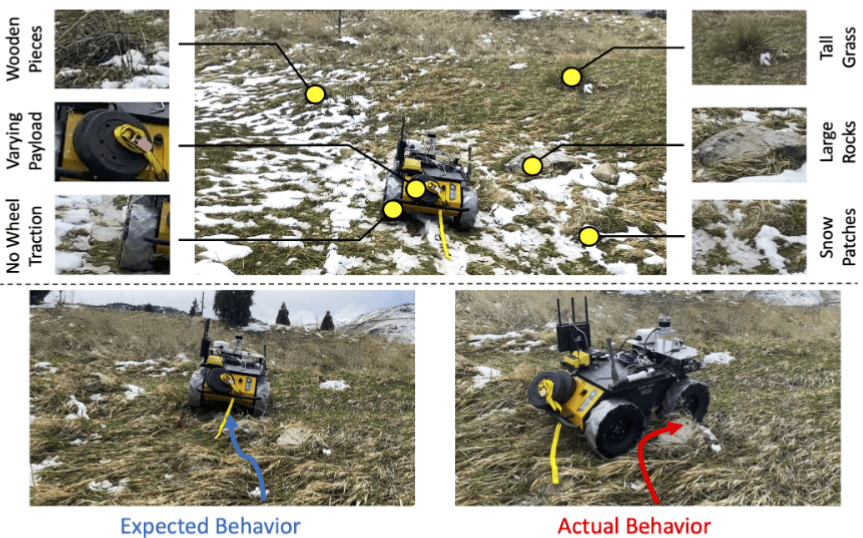

Enhancing Consistent Ground Maneuverability by Robot Adaptation to Complex Off-Road Terrains

Conference on Robot Learning (CoRL), 2021

Selected for Oral Presentation (6.5% acceptance)

Terrain adaptation is a critical ability for a ground robot to effectively traverse unstructured off-road terrain in real-world field environments such as forests. However, the expected or planned maneuvering behaviors cannot always be accurately executed due to setbacks such as reduced tire pressure. This inconsistency negatively affects the robot’s ground maneuverability and can cause slower traversal time or errors in localization. To address this shortcoming, we propose a novel method for consistent behavior generation that enables a ground robot’s actual behaviors to more accurately match expected behaviors while adapting to a variety of complex off-road terrains. Our method learns offset behaviors in a self-supervised fashion to compensate for the inconsistency between the actual and expected behaviors without requiring the explicit modeling of various setbacks. To evaluate the method, we perform extensive experiments using a physical ground robot over diverse complex off-road terrain in real-world field environments. Experimental results show that our method enables a robot to improve its ground maneuverability on complex unstructured off-road terrain with more navigational behavior consistency, and outperforms previous and baseline methods, particularly on challenging terrain such as that found in forests.

IROS

An Integrated Approach to Context-Sensitive Moral Cognition in Robot Cognitive Architectures

IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2021

Finalist for Best Paper Award on Cognitive Robotics

Acceptance of social robots in human-robot collaborative environments depends on the robots' sensitivity to human moral and social norms. Robot behavior that violates norms may decrease trust and lead human interactants to blame the robot and view it negatively. Hence, for long-term acceptance, social robots need to detect possible norm violations in their action plans and refuse to perform such plans. This paper integrates the Distributed, Integrated, Affect, Reflection, Cognition (DIARC) robot architecture (implemented in the Agent Development Environment (ADE)) with a novel place recognition module and a norm-aware task planner to achieve context-sensitive moral reasoning. This will allow the robot to reject inappropriate commands and comply with context-sensitive norms. In a validation scenario, our results show that the robot would not comply with a human command to violate a privacy norm in a private context.

AAAI

Enhancing Ground Maneuverability Through Robot Adaptation to Complex Unstructured Off-Road Terrains

Association for the Advancement of Artificial Intelligence (AAAI) Spring Symposium Series, 2021

While a robot navigates on complex unstructured off-road terrains, the robot's expected behaviors cannot always be executed accurately due to setbacks such as wheel slip and reduced tire pressure. In this paper, we propose an approach for enhancing a robot's maneuverability by consistent behavior generation that enables a ground robot's actual behaviors to more accurately match expected behaviors while adapting to off-road terrain. Our approach learns offset behaviors that are used to compensate for the inconsistency between the actual and expected behaviors without explicitly modeling various setbacks. Experimental results in complex unstructured off-road terrains demonstrate the superior performance of our proposed approach to achieve consistent behaviors.

IJRR

Robot Perceptual Adaptation to Environment Changes for Long-Term Human Teammate Following

The International Journal of Robotics Research (IJRR), pages: 0278364919896625, 2020

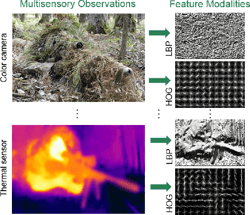

Perception is one of the several fundamental abilities required by robots, and it also poses significant challenges, especially in real-world field applications. Long-term autonomy introduces additional difficulties to robot perception, including short- and long-term changes of the robot operation environment (e.g., lighting changes). In this article, we propose an innovative human-inspired approach named robot perceptual adaptation (ROPA) that is able to calibrate perception according to the environment context, which enables perceptual adaptation in response to environmental variations. ROPA jointly performs feature learning, sensor fusion, and perception calibration under a unified regularized optimization framework. We also implement a new algorithm to solve the formulated optimization problem, which has a theoretical guarantee to converge to the optimal solution. In addition, we collect a large-scale dataset from physical robots in the field, called perceptual adaptation to environment changes (PEAC), with the aim to benchmark methods for robot adaptation to short-term and long-term, and fast and gradual lighting changes for human detection based upon different feature modalities extracted from color and depth sensors. Utilizing the PEAC dataset, we conduct extensive experiments in the application of human recognition and following in various scenarios to evaluate ROPA. Experimental results have validated that the ROPA approach obtains promising performance in terms of accuracy and efficiency, and effectively adapts robot perception to address short-term and long-term lighting changes in human detection and following applications.

IROS

Voxel-Based Representation Learning for Place Recognition Based on 3D Point Clouds

IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2020

Place recognition is a critical component towards addressing the key problem of Simultaneous Localization and Mapping (SLAM). Most existing methods use visual images, whereas place recognition using 3D point clouds, especially based on voxel representations, has not yet been well addressed. In this paper, we introduce the novel approach of voxel-based representation learning (VBRL) that uses 3D point clouds to recognize places with long-term environment variations. VBRL splits a 3D point cloud input into voxels and uses multi-modal features extracted from these voxels to perform place recognition. Additionally, VBRL uses structured sparsity-inducing norms to learn representative voxels and feature modalities that are important to match places under long-term changes. Place recognition and voxel and feature learning are integrated into a unified regularized optimization formulation. As the sparsity-inducing norms are non-smooth, it is hard to solve the formulated optimization problem. Thus, we design a new iterative optimization algorithm, which has a theoretical convergence guarantee. Experimental results have shown that VBRL performs place recognition well using 3D point cloud data and is capable of learning the importance of voxels and feature modalities.

RSS

Robot Adaptation to Unstructured Terrains by Joint Representation and Apprenticeship Learning

Robotics: Science and Systems (RSS), 2019

When a mobile robot is deployed in a field environment, e.g., during a disaster response application, the capability of adapting its navigational behaviors to unstructured terrains is essential for effective and safe robot navigation. In this paper, we introduce a novel joint terrain representation and apprenticeship learning approach to implement robot adaptation to unstructured terrains. Different from conventional learning-based adaptation techniques, our approach provides a unified problem formulation that integrates representation and apprenticeship learning under a unified regularized optimization framework, instead of treating them as separate and independent procedures. Our approach also has the capability to automatically identify discriminative feature modalities, which can improve the robustness of robot adaptation. In addition, we implement a new optimization algorithm to solve the formulated problem, which provides a theoretical guarantee to converge to the global optimal solution. In the experiments, we extensively evaluate the proposed approach in real-world scenarios, in which a mobile robot navigates on familiar and unfamiliar unstructured terrains. Experimental results have shown that the proposed approach is able to transfer human expertise to robots with small errors, achieve superior performance compared with previous and baseline methods, and provide intuitive insights on the importance of terrain feature modalities.

VAMR

Scalable Representation Learning for Long-Term Augmented Reality-Based Information Delivery in Collaborative Human-Robot Perception

International Conference on Virtual, Augmented and Mixed Reality (VAMR), 2019

Augmented reality (AR)-based information delivery has been attracting increasing attention in the past few years to improve communication in human-robot teaming. In the long-term use of AR systems for collaborative human-robot perception, one of the biggest challenges is to perform place and scene matching under long-term environmental changes, such as dramatic variations in lighting, weather and vegetation across different times of the day, months, and seasons. To address this challenge, we introduce a novel representation learning approach that learns a scalable long-term representation model that can be used for place and scene matching in various long-term conditions. Our approach is formulated as a regularized optimization problem, which selects the most representative scene templates in different scenarios to construct a scalable representation of the same place that can exhibit significant long-term environment changes. Our approach adaptively learns to select a small subset of the templates to construct the representation model, based on a user-defined representativeness threshold, which makes the learned model highly scalable to the long-term variations in real-world applications. To solve the formulated optimization problem, a new algorithmic solver is designed, which is theoretically guaranteed to converge to the global optimum. Experiments are conducted using two large-scale benchmark datasets, which have demonstrated the superior performance of our approach for long-term place and scene matching.

RSS

Metacognitive Reasoning of Perceptual Inconsistency for Illusion Detection

Robotics: Science and Systems (RSS), Workshop paper, 2018

When autonomous robots operate in adversarial environments, such as in tactical battlefields, they may face various misinformation attacks, such as illusion and deception, by potential adversaries. Different from conventional reactive design that reacts through analyzing the effects of attacks and developing countermeasures, we propose the insight of an active design by anticipating the adversary via investigating potential attacks. Several techniques were developed in traditional adversarial applications to defend against misinformation attacks, including data sanitization and model improvement to protect against causative attacks, and classifier randomization, near-optimal evasion protection, and robust feature selection to defend against exploratory attacks. However, previous methods are not capable of addressing the challenges in robot perception in illusive and deceptive scenarios. This work aims at improving the robustness of robot perception against illusion in data-rich environments with multisensory high-dimensional observations. Specifically, in this workshop paper, we introduce a metacognitive reasoning approach for robots to reason about consistency of multisensory perception data in order to detect illusion.

ICRA

Omnidirectional Multisensory Perception Fusion for Long-Term Place Recognition

IEEE International Conference on Robotics and Automation (ICRA), 2018.

Over recent years, long-term place recognition has attracted increasing attention to detect loops for large-scale Simultaneous Localization and Mapping (SLAM) in loopy environments during long-term autonomy. Almost all existing methods are designed to work with traditional cameras with a limited field of view. Recent advances in omnidirectional sensors offer a robot an opportunity to perceive the entire surrounding environment. However, no work thus far has investigated how omnidirectional sensors can help long-term place recognition, especially when multiple types of omnidirectional sensory data are available. In this paper, we propose a novel approach to integrate observations obtained from multiple sensors from different viewing angles in the omnidirectional observation in order to perform multi-directional place recognition in long-term autonomy. Our approach also answers two new questions when omnidirectional multisensory data is available for place recognition, including whether it is possible to recognize a place with long-term appearance variations when robots approach it from various directions, and whether observations from various viewing angles are equally informative. To evaluate our approach and hypothesis, we have collected the first large-scale dataset that consists of omnidirectional multisensory (intensity and depth) data collected in urban and suburban environments across a year. Experimental results have shown that our approach is able to achieve multi-directional long-term place recognition, and identifies the most discriminative viewing angles from the omnidirectional observation.

ICRA

Robot Adaptation to Environment Changes in Long-Term Autonomy

IEEE International Conference on Robotics and Automation (ICRA), Workshop paper, 2018.

Long-term autonomy introduces new challenges to robot perception, due to long-term changes of robot operation environments. In this paper, we propose a learning-based approach that adapts robot perception according to environment variations. Our approach jointly performs feature learning, multisensory fusion, and adaptation under a unified regularized optimization framework. To evaluate the performance of our approach, we collected a dataset from physical robots in underground and field environments. Experimental results have shown that our approach enables robots to adapt to long-term environment changes.

Posters

ICRA POSTER

Multisensory Internal Pipe Threat Prediction Using Inline Inspection Robots

IEEE International Conference on Robotics and Automation (ICRA), Abstract-Only Poster, 2018.

ICRA POSTER

Fast Deployment of Multi-Robot Autonomy in Underground Environments

IEEE International Conference on Robotics and Automation (ICRA), Abstract-Only Poster, 2018.
