Research

I envision autonomous robotic systems that adapt at a near-human level to short-term and long-term variations while operating in uncertain and potentially adverse unstructured environments.

Accordingly, my research focuses on enabling lifelong autonomy in robots by learning representations, behavior adaptation, and self-reflection. I consider behavior adaptation and self-reflection essential capabilities of intelligent robots that, while operating in an unstructured environment, can reason about themselves and the environment and adjust their behaviors accordingly without human supervision. Thus, my work follows the robot learning paradigm: I draw inspiration from cognitive reflection for human adaptation and awareness, and propose machine learning models that allow robots to make informed decisions in time-, safety-, and mission-critical applications in unstructured and potentially adverse environments. I use formal methods from combinatorial optimization, robotics, computer vision, machine learning, and deep learning to advance my research.

Check out my full list of publications.

Lifelong Autonomy

Robots are becoming an integral part of human civilization. Over the past several years, autonomous mobile robots have been used more commonly in unstructured field environments to address real-world applications, including disaster response, infrastructure inspection, homeland defense, and subterranean and planetary exploration. For the vision of fully intelligent robots to materialize, robots must have lifelong autonomy, i.e., the ability to operate autonomously over long periods (e.g., days, weeks, seasons, or even years). More importantly, during these long periods, a robot's environment can undergo unpredictable gradual and/or radical changes. As such, these long-term challenges add an extra dimension to the fundamental robotics problems of perception, planning, navigation, and SLAM, making them more difficult to solve.

Lifelong Autonomy via Learning Representations

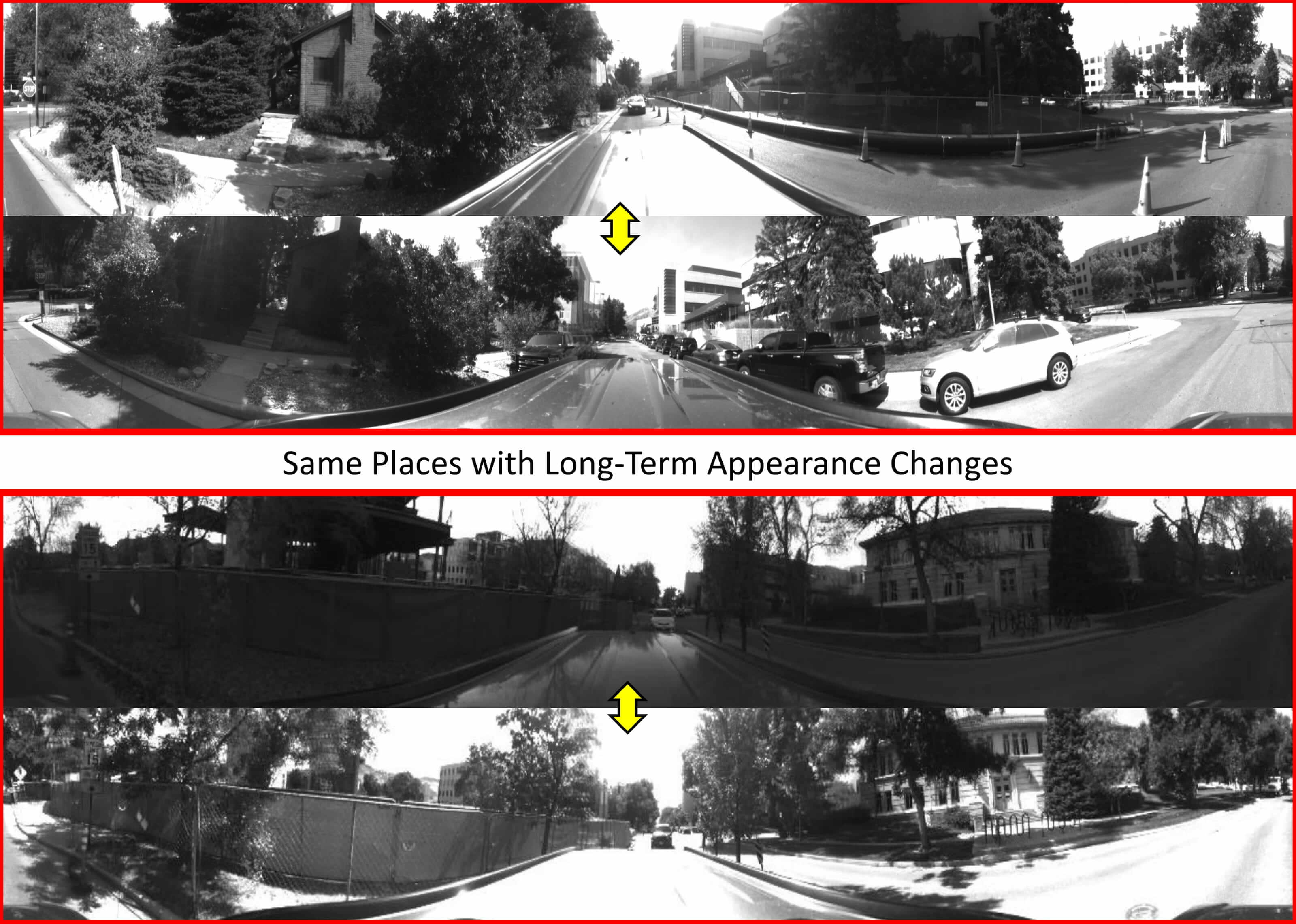

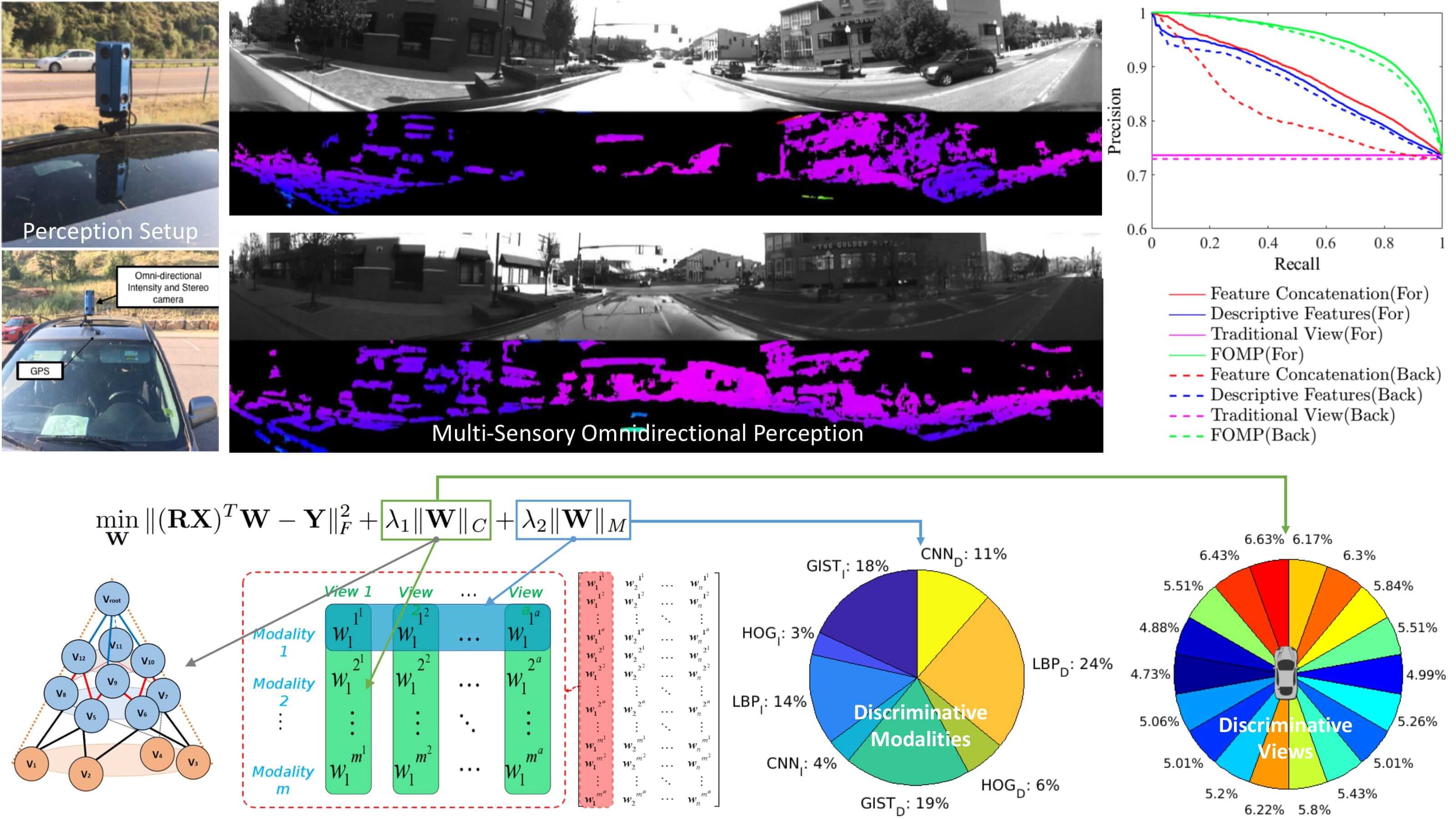

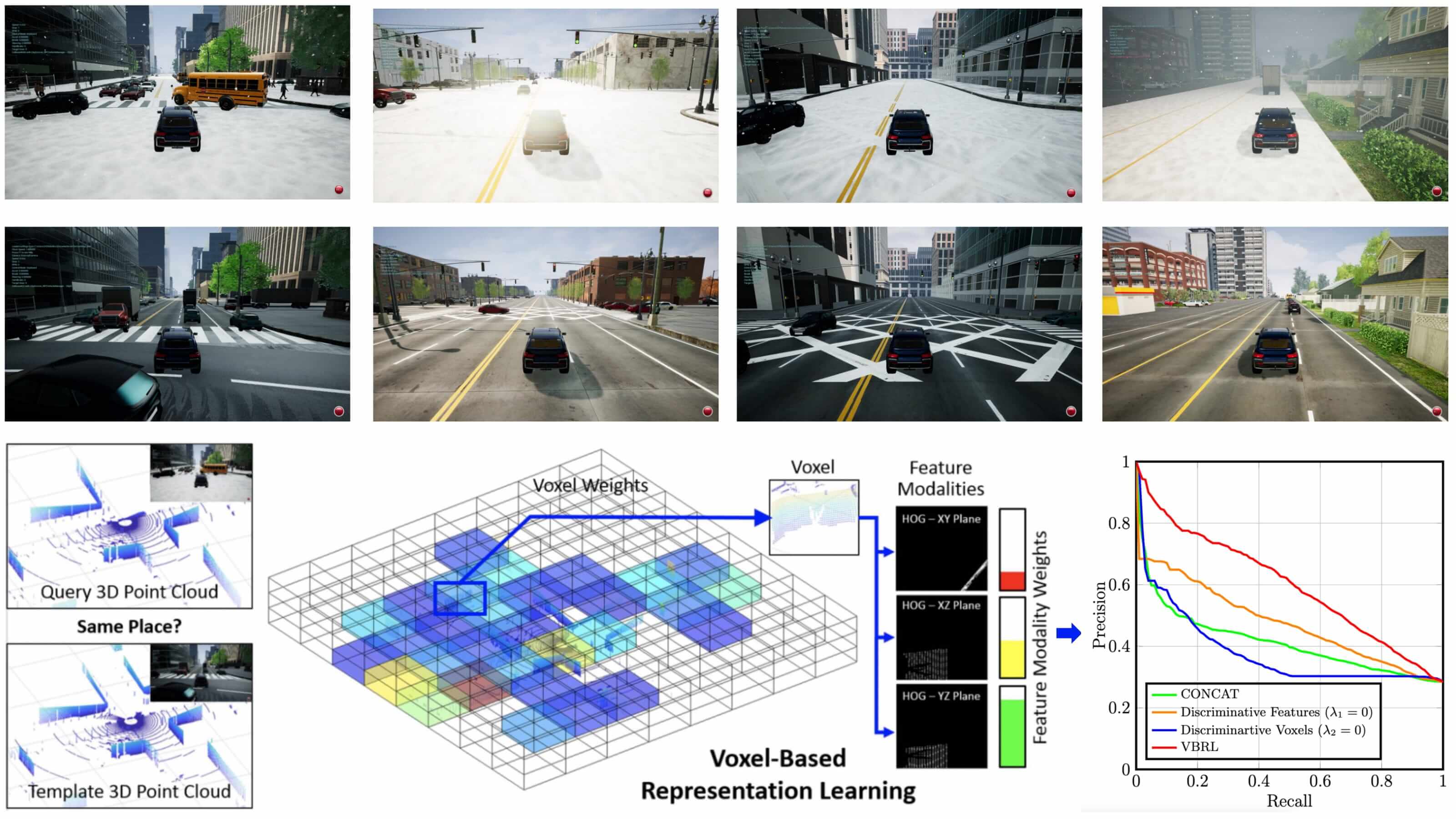

The performance of robots in long-term operations relies heavily on the design of data representations. My research aims to develop representations that make it easier for robots to capture environmental metadata that can characterize any environment while being robust to long-term changes and appearance variations. To this end, I use formal methods from convex optimization and sparsity-inducing regularized optimization to identify and analyze representations useful for robot autonomy in long-term settings. Specifically, I introduce several real-time methods that help identify the most important views, feature modalities, and/or sensor modalities for effectively carrying out lifelong robot operations.
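As a rough illustration of how sparsity-inducing regularization can rank modalities, the sketch below fits a least-squares model with a group-lasso penalty using proximal gradient descent. This is a generic textbook technique chosen for illustration, not the specific formulation used in my papers; the modality names (`color`, `depth`, `lidar`), the synthetic data, and all function names are hypothetical.

```python
import numpy as np


def group_soft_threshold(w, threshold):
    """Proximal operator of threshold * ||w||_2: shrinks a whole group toward zero."""
    norm = np.linalg.norm(w)
    if norm <= threshold:
        return np.zeros_like(w)
    return (1.0 - threshold / norm) * w


def fit_group_lasso(X, y, groups, lam=0.1, n_iters=500):
    """Minimise (1/2n)||Xw - y||^2 + lam * sum_g ||w_g||_2 by proximal gradient."""
    n, d = X.shape
    w = np.zeros(d)
    # Step size 1/L, where L = sigma_max(X)^2 / n bounds the gradient's Lipschitz constant.
    step = n / (np.linalg.norm(X, 2) ** 2)
    for _ in range(n_iters):
        grad = X.T @ (X @ w - y) / n
        z = w - step * grad
        w_next = z.copy()
        # Apply the group-wise shrinkage to each modality's block of weights.
        for idx in groups.values():
            w_next[idx] = group_soft_threshold(z[idx], step * lam)
        w = w_next
    return w


def modality_scores(w, groups):
    """Score each modality by the l2 norm of its weights; 0 means it was discarded."""
    return {name: float(np.linalg.norm(w[idx])) for name, idx in groups.items()}


def make_demo(seed=0):
    """Synthetic data (an assumption for this sketch): only 'depth' carries signal."""
    rng = np.random.default_rng(seed)
    groups = {
        "color": np.arange(0, 4),
        "depth": np.arange(4, 8),
        "lidar": np.arange(8, 12),
    }
    X = rng.standard_normal((200, 12))
    true_w = np.zeros(12)
    true_w[groups["depth"]] = [1.0, -2.0, 0.5, 1.5]
    y = X @ true_w + 0.01 * rng.standard_normal(200)
    return X, y, groups


if __name__ == "__main__":
    X, y, groups = make_demo()
    w = fit_group_lasso(X, y, groups, lam=0.1)
    print(modality_scores(w, groups))
```

Because the penalty acts on whole groups rather than individual weights, an uninformative modality is dropped as a unit, which is what makes the resulting scores readable as a modality ranking.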

I have also demonstrated that these learned representations can be integrated with a robot's real-time decision-making capabilities (e.g., navigation) to further enhance lifelong autonomy in unstructured environments. My research on learning representations has contributed to the effectiveness of autonomous navigation and exploration, SLAM in GPS-denied environments, and localization in autonomous driving under varying lighting and vegetation conditions in dynamic environments.

Lifelong Autonomy via Robot Adaptation

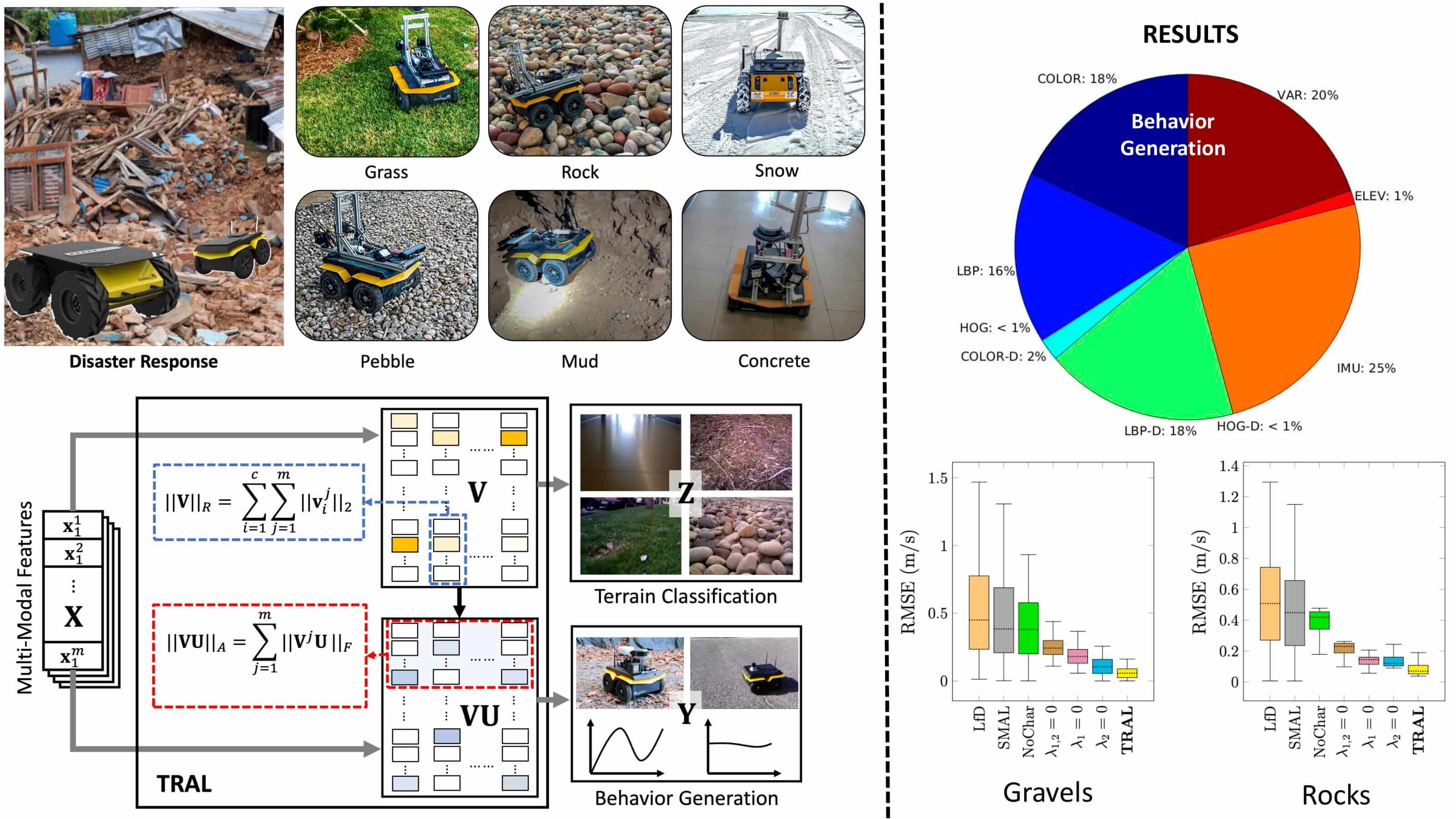

Change and novelty are two of the most significant challenges that prevent robots from achieving lifelong autonomy. When mobile robots are deployed in real-world field environments on time- and safety-critical missions such as disaster response, search and rescue, and reconnaissance, their ability to adapt their behaviors to unstructured environments is essential for safe, effective, and successful operation. Without effective adaptation, lifelong robots will inevitably fail, jeopardizing their missions and surrounding environments. As opposed to robot learning (where robots gain a new skill or gradually change their attitude), my research on robot adaptation for lifelong autonomy allows a robot to change its behaviors on the fly to adjust to gradually or drastically changing unstructured environments.

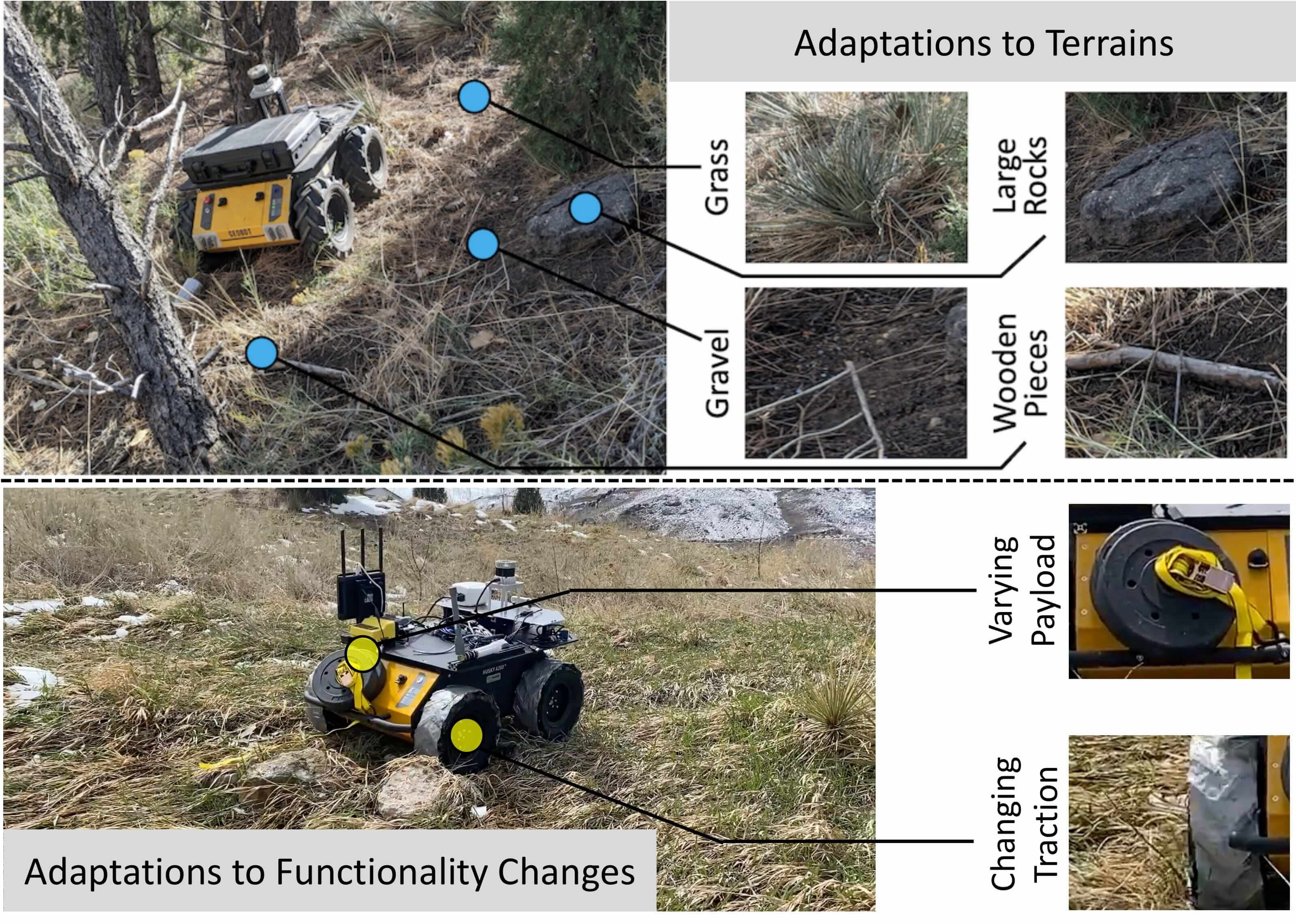

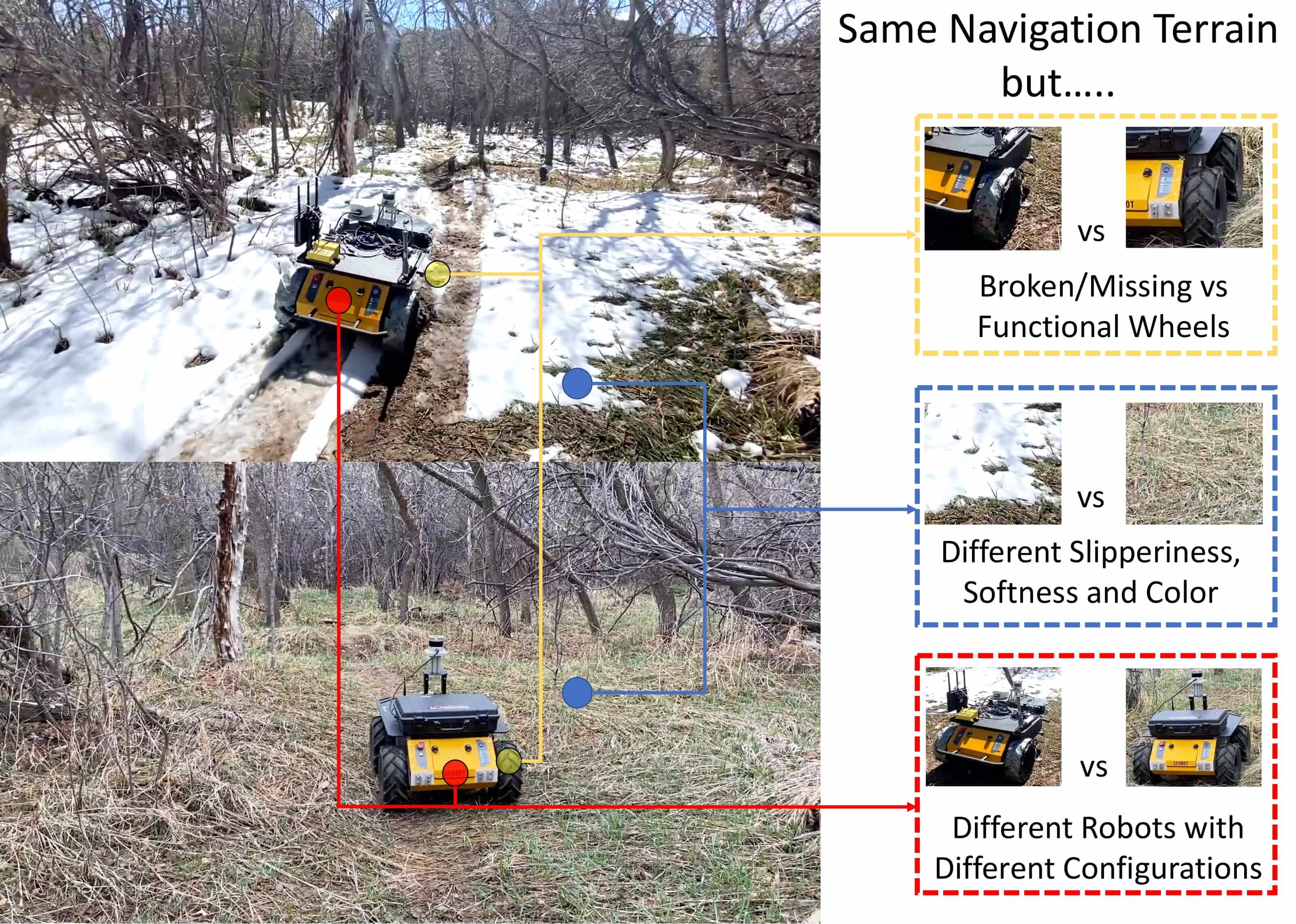

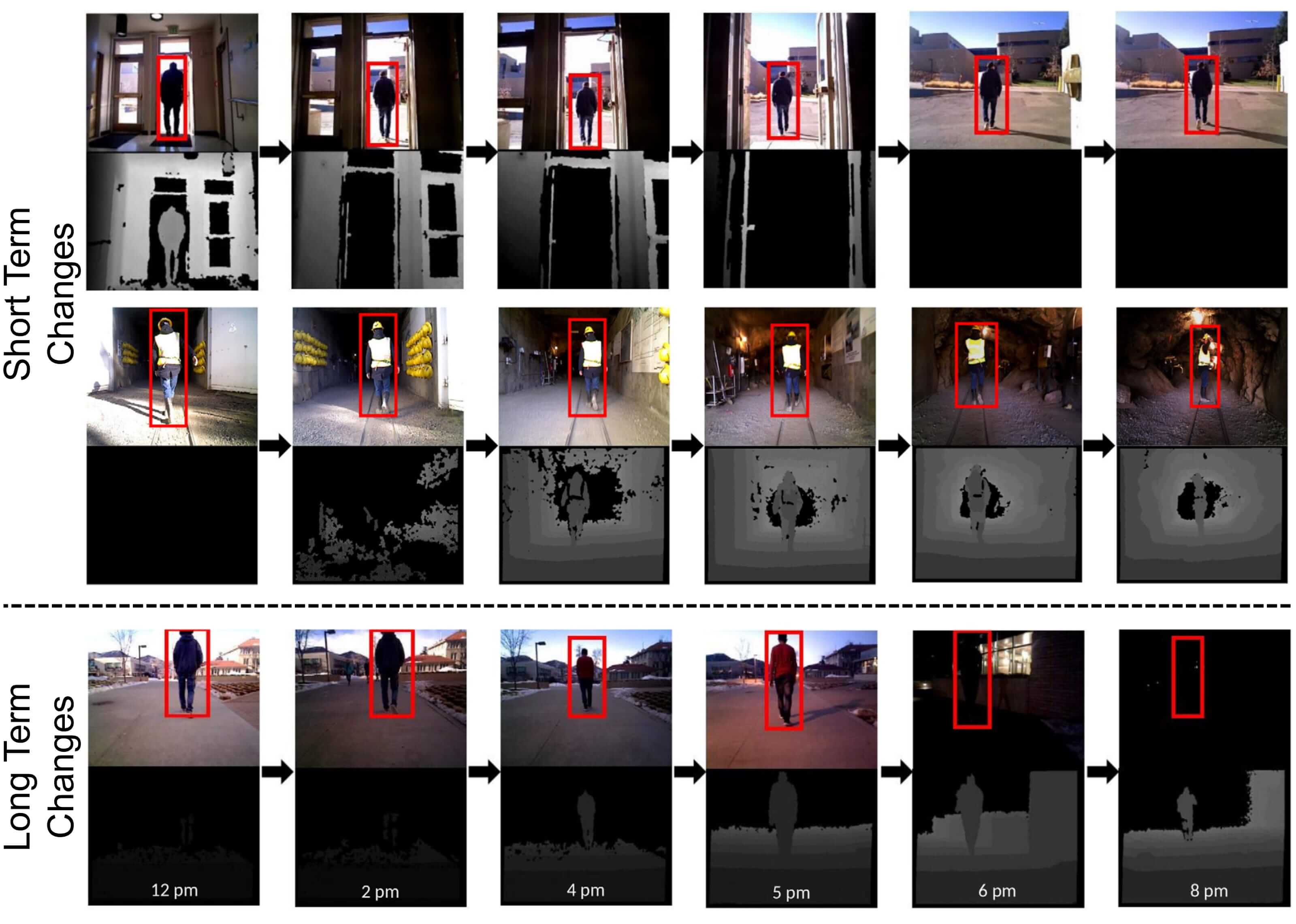

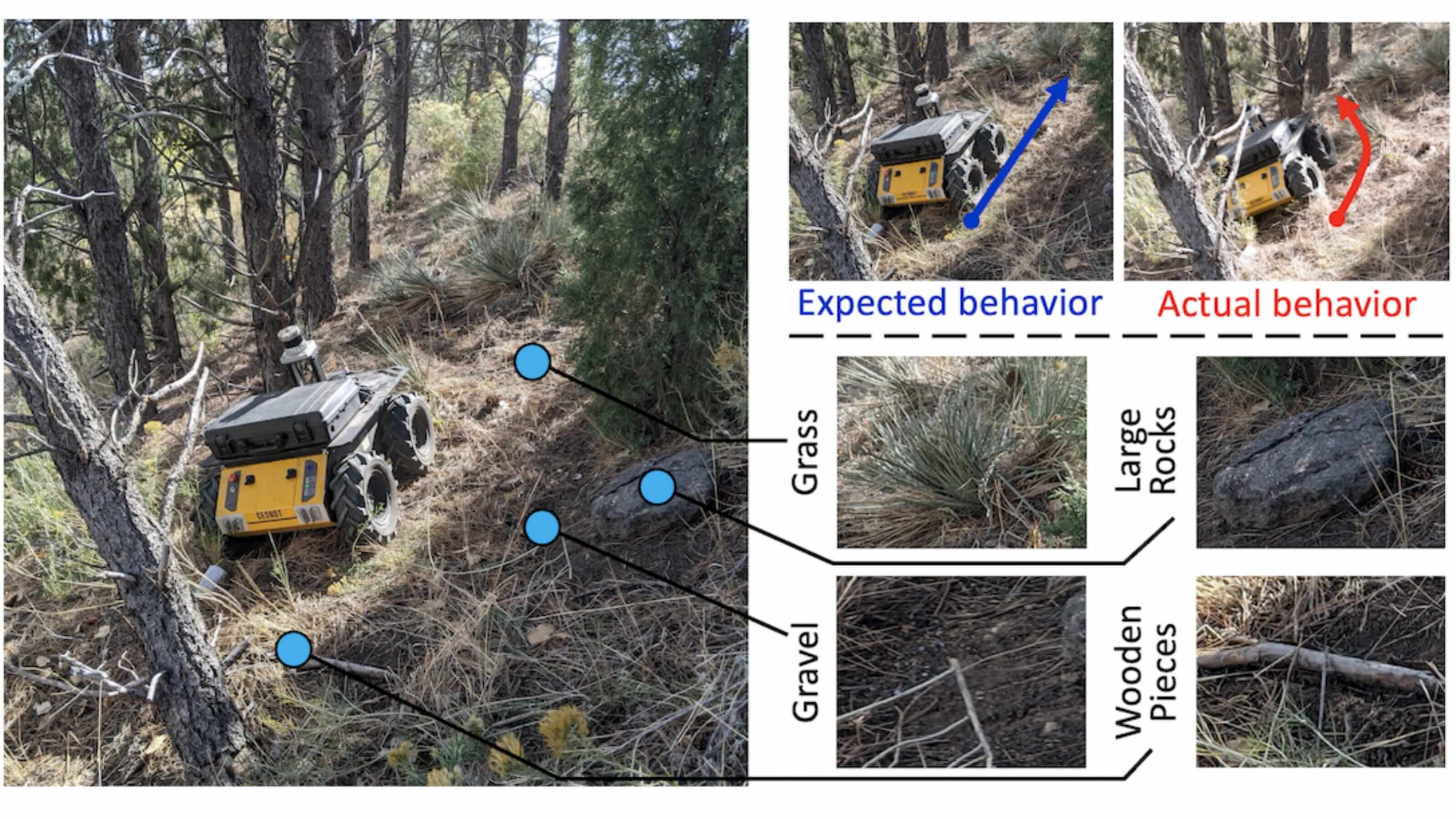

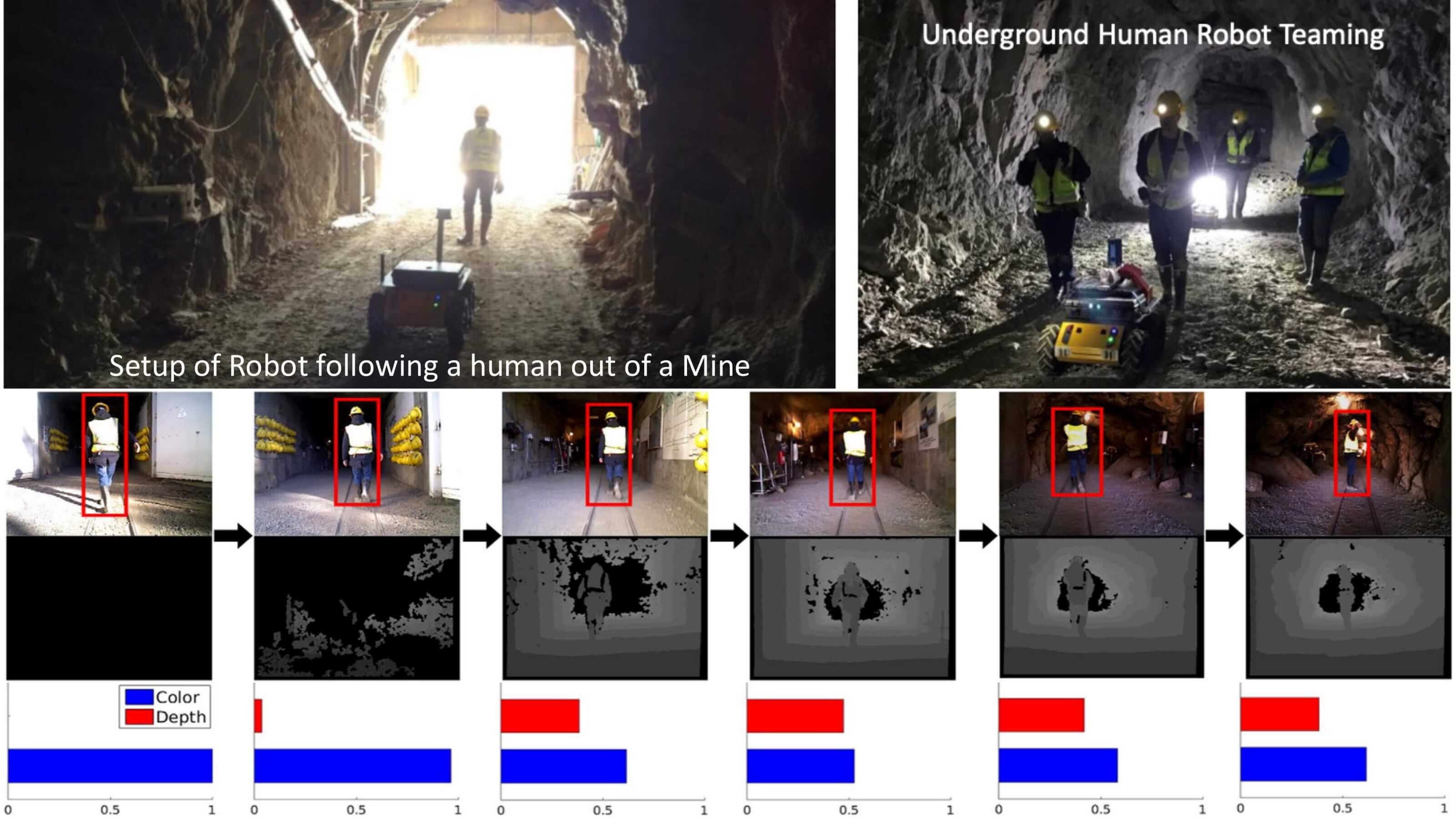

My research on learning robot adaptation is motivated by the observation that robots in time-critical operations cannot afford to learn optimal behaviors over time and must instead adapt instantaneously. I use paradigms from learning from demonstration, representation learning, and self-supervised learning to formulate the adaptation process as a structured regularized convex optimization problem. Specifically, my research answers how robots can use joint representation and apprenticeship learning for adaptive terrain traversal, and how robots can enhance ground maneuverability to compensate for the mismatch between expected and observed behaviors caused by unexpected setbacks in an unstructured environment. Furthermore, from the perspective of perception, I proposed a human-inspired method of perceptual adaptation that addresses the problem of adapting a robot's multisensory perception during short- and long-term robot teaming operations. This approach allows a robot to adjust its learned perception model, improving accuracy and efficiency. Through rigorous field testing, my research on robot adaptation has advanced the state of the art in long-term autonomy by achieving a higher success rate and efficiency in various autonomous robot operations over short and long periods.
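For readers unfamiliar with the term, a structured regularized convex optimization problem generally has the shape sketched below. This is a generic template given for orientation, not the exact objective from any of my papers, and every symbol in it is an assumption introduced here: $x_i$ is a feature vector, $y_i$ a demonstrated behavior, $W$ the weights to learn, $\ell$ a convex loss (e.g., squared error), $\mathcal{G}$ a partition of the features into groups such as modalities, and $\lambda \ge 0$ a trade-off parameter.

```latex
\min_{W} \;\; \sum_{i=1}^{n} \ell\!\left(W^{\top} x_i,\; y_i\right)
\;+\; \lambda \sum_{g \in \mathcal{G}} \left\lVert W_g \right\rVert_F
```

The first term fits the demonstrations, while the second imposes structure by shrinking whole groups of weights $W_g$ together. Since both terms are convex in $W$, any local minimum is a global one.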

Lifelong Autonomy via Robot Reflection

To further advance lifelong autonomy, I draw inspiration from cognitive reflection for human adaptation and awareness and propose a novel robot reflection paradigm. The goal of my research on robot reflection is to develop approaches that allow a robot to be aware of and reason about (i) itself, (ii) its environment, and (iii) its interaction with the environment. Through this process of reflection, robots can adjust their adaptation process without requiring human supervision and, more importantly, reflect on their behaviors to improve their performance over their lifetimes.

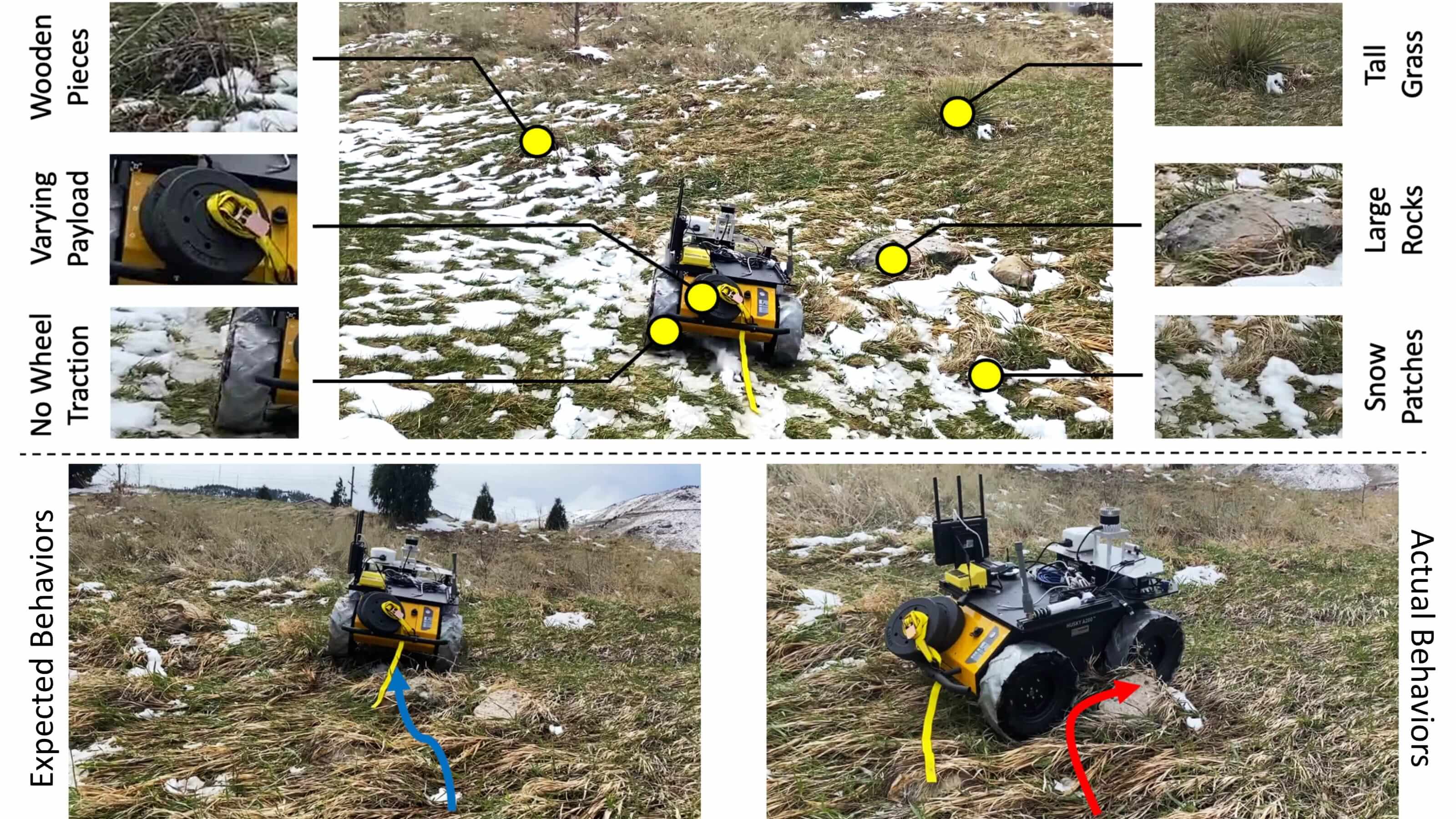

Towards enabling self-reflection, I have developed robot learning approaches that enable robots to negotiate with terrain, understand its characteristics, and adapt their present and future behaviors by dynamically combining multiple policies for navigation. Furthermore, I develop terrain adaptation methods that enable a ground robot to adapt to changes in terrain and in its own functionality (e.g., broken wheels or an offset payload). Through rigorous field testing in various settings, my research on robot reflection has advanced the state of the art in long-term autonomy by achieving a higher success rate and efficiency in various autonomous robot operations over short and long durations. Specifically, these approaches adapt to unseen and uncertain environments without requiring additional training data or a fixed reward-based policy (as in RL), while avoiding catastrophic forgetting. At present, I am working on robot perceptual adaptation in the presence of sensory obscuration or failures, and on how robots can handle combined failures in perception and actuation.